AI Coding Agent Pricing

TL;DR >> Current AI coding agents have misaligned pricing—users pay for agent inefficiencies and over-iteration. Credit burn rates are unpredictable and scale with agent behavior, not user value. Solutions include fair-use models, temporal arbitrage, outcome-based pricing, and hybrid local/remote approaches. <<

# The Credit Burn Problem

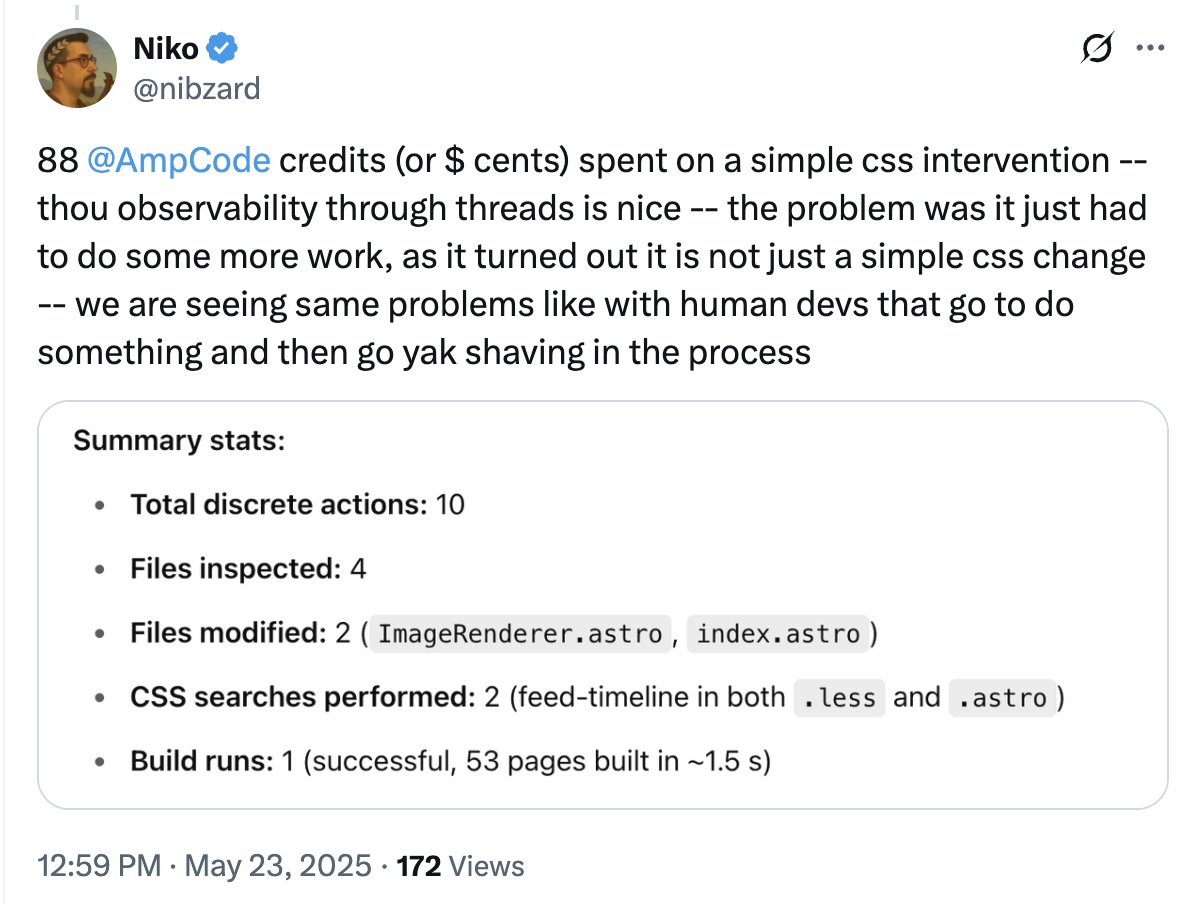

My recent experience with AMP illustrates a fundamental pricing problem. After bootstrapping an Astro project with pnpm create astro@latest and generating specifications through OpenAI’s o3 model, I let AMP implement the spec. The results were impressive enough that I immediately purchased credits after exhausting the free tier. However, this revealed how rapidly credits disappear.

AMP operates on a credit system covering all cost-incurring operations: web searches, LLM inference, and tool usage. While they claim to pass through costs without markup, the burn rate is concerning. The core issue is misaligned incentives—agents make decisions about tool calls and iterations, but users bear the financial consequences.

Cursor takes a different approach, charging per LLM request regardless of token consumption, with token-based pricing only for their premium MAX options using cutting-edge models.

My experience with Claude Code wasn’t cheap—but when viewed through the lens of value delivered, the pricing starts to make sense. Compared to hiring a junior developer (and skipping the intermediate step of translating requirements), the efficiency gains become clear. Even with top-tier, SOTA models, the cost to ship a significant feature ranged from $20 to $200—surprisingly reasonable when measured against actual output.

This misalignment creates several problems:

- Agents over-iterate by design, exploring multiple solution paths

- Agents tend to create slop and increase loc

- No built-in incentive for efficiency optimization

- Unpredictable costs that scale with agent behavior rather than user value

- Users effectively subsidize AI system learning curves

The user experience suffers when pricing becomes the primary selection criterion for AI agents. The ideal solution would involve outcome-based pricing or better alignment between user intent and resource consumption. This mirrors challenges with human developers, where salary costs don’t always correlate with output quality.

# Market Forces at Play

The current race-to-the-bottom pricing, with everyone claiming “cost pass-through,” isn’t sustainable long-term. Once VC-subsidized market prices end, successful companies will need to:

- Optimize AI efficiency for better margins

- Create differentiated value justifying premium pricing

- Build competitive moats through specialized domain knowledge and proprietary models (as seen with Vercel’s v0 model for Next.js)

# Alternative Pricing Models

Fair-Use Architecture

Drawing from telecommunications models that mirror actual usage patterns:

- Base allocation: X successful completions included monthly

- Overage tiers: Progressive volume discounts

- Throttling options: Reduced speed/capability instead of hard cutoffs

- Rollover credits: Unused allocation carries forward, encouraging loyalty

This approach solves the “agent inefficiency tax” by providing predictable costs for normal usage while charging premiums only for extraordinary consumption.

Temporal Arbitrage Pricing

Batch processing and off-peak inference create interesting opportunities, especially with remote agents like Augment Code’s recent preview. Background agents could handle non-urgent tasks during low-demand periods.

Priority-based tiers:

- Instant: Real-time processing at premium rates

- Fast: 5-10 minute queue at standard rates

- Batch: Hours/overnight processing with 50-70% discounts

- Background: Multi-day large refactors with 80%+ discounts

Hybrid Local/Remote Pricing

As edge computing capabilities improve:

- Local-first: Smaller models run locally, complex tasks use cloud resources

- Confidence-based routing: High-confidence completions stay local

- Progressive enhancement: Start local, escalate to cloud when needed

- User-controlled: Explicit triggers for expensive model usage

Outcome-Based Evolution

Pure outcome pricing will likely start narrow and expand:

- Feature-complete components: Fixed price per working component

- Bug fixes: Flat rate per successfully resolved issue

- Performance improvements: Success fees based on measurable gains

- Full features: Story-point or t-shirt sizing with guaranteed completion

This resembles open-source bounty models and bug-hunting reward systems.

Caching Economics

An underexplored area with significant potential:

- Pattern libraries: Pre-computed common implementations

- Project fingerprinting: Similar codebases share cached solutions

- Community effects: Popular patterns become cheaper over time

- Negative pricing: Users earn credits for contributing to cache hits

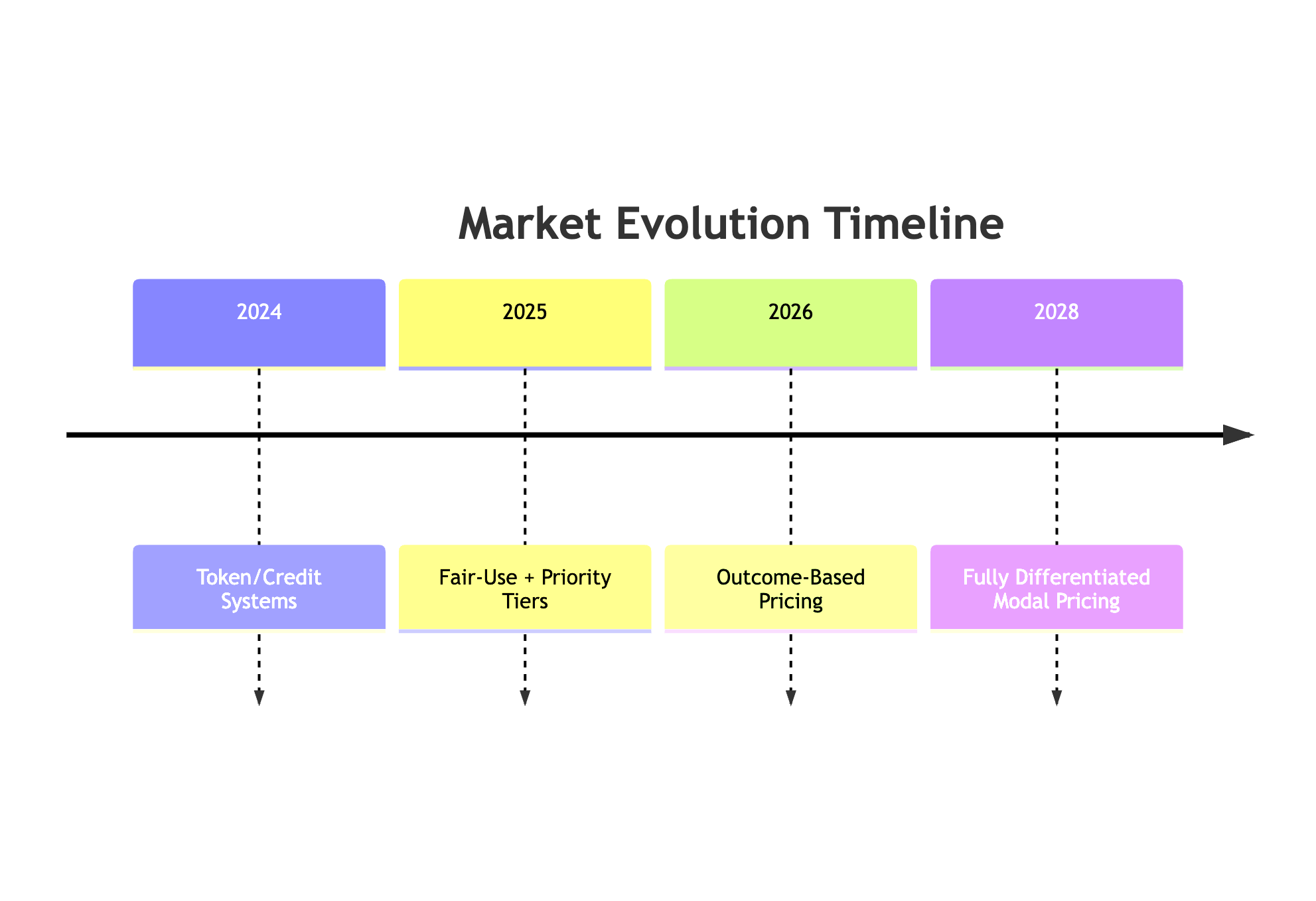

# Market Evolution Timeline

The progression will likely follow this path:

- Current state: Crude token/credit systems

- Next 12 months: Fair-use models with priority tiers emerge

- 2-3 years: Outcome-based pricing becomes standard for defined tasks

- 3-5 years: Fully differentiated pricing across different modalities

Success will belong to whoever first creates a pricing model that feels “fair” to developers while capturing the value being generated.

The interesting question remains: How quickly must local model capabilities improve before hybrid local/remote pricing becomes viable?